Bug Causes Damage to American Carrier

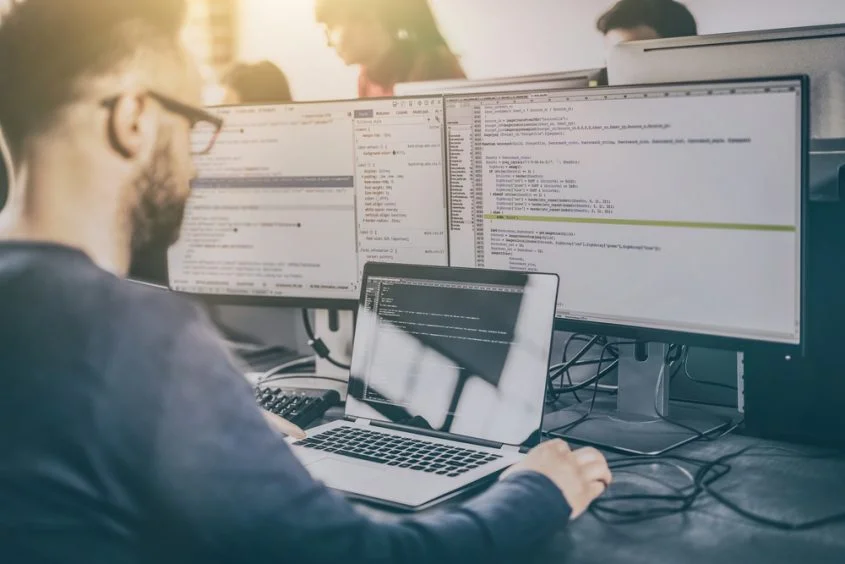

Southwest Airlines points the finger at a flaw in a network firewall. After the meltdown that caused more than 16,000 flight cancellations in December, the resilience of the company's computer systems is once again being questioned.

In the United States, a technological glitch led to the temporary suspension of all Southwest Airlines flights, raising concerns about the resilience of the carrier's IT infrastructure. The Dallas, Texas-based company attributed the problem to the failure of a network firewall provided by a supplier, which caused a temporary loss of connection to key systems.

In a statement to Reuters, the carrier said that flights had been halted as a precaution and that there was no evidence of a cyberattack. It declined to name the supplier and did not explain why its contingency planning had not accounted for such a failure.

Although the exact cause remains unclear, some industry experts have questioned why Southwest's systems did not include more redundancy. The carrier has been under fire since a software problem during the Christmas holidays led to more than 16,000 flight cancellations, disrupted the travel plans of 2 million customers, and cost the company more than a billion dollars.

"This would indicate that resilience is not adequately addressed in their systems," said Eric Parent, a private pilot and CEO of EVA Technologies, a cybersecurity company with offices in Canada, the US and Europe. "Some significant improvements should be considered to increase their maturity and ability to maintain operations."

The rest of the original article is available via Reuters.